Even though text-generation models are good at generating content, they sometimes struggle to return accurate facts. This happens because of the way they are trained: they can only draw on what they learned during training. Retrieval Augmented Generation (RAG) techniques address this issue by fetching relevant context from a knowledge base.

Corrective RAG (CRAG) adds a step that keeps the model faithful to the information it retrieves. It corrects factual inaccuracies in real time by ranking candidate outputs on two criteria: how well they fit the model and how closely they match the retrieved information. This lets it make accurate corrections before the text is finalized.

For details, see the Corrective Retrieval Augmented Generation paper.

Top-Level Structure

CRAG has three main parts:

- Generative Model: It generates an initial sequence.

- Retrieval Model: It retrieves context from the knowledge base based on the initial sequence.

- Retrieval Evaluator: It manages the back-and-forth between the generator and the retriever, ranks candidate outputs, and decides which final sequence is returned as output.

In CRAG, the Retrieval Evaluator links the Retriever and the Generator. At each step, it tracks the text generated so far and asks the generator for candidate continuations. It then retrieves knowledge using the updated context, scores each candidate for both fluency and factual accuracy, and appends the best one to the output.
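The per-step selection described above can be sketched in plain Python. This is a toy illustration under stated assumptions, not the paper's scoring:

- Each candidate's fluency is assumed to arrive as a log-probability from the generator.
- Factual accuracy is approximated by word overlap with the retrieved passages.
- `Candidate`, `support_score`, `select_best`, and the weight `alpha` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    logprob: float  # assumed: log-probability the generator assigns to this continuation


def tokens(text: str) -> set[str]:
    """Lowercased words with surrounding punctuation stripped."""
    cleaned = (w.strip(".,;:!?").lower() for w in text.split())
    return {w for w in cleaned if w}


def support_score(candidate: str, passages: list[str]) -> float:
    """Toy accuracy proxy: best fraction of candidate words found in a single passage."""
    cand = tokens(candidate)
    if not cand:
        return 0.0
    return max((len(cand & tokens(p)) / len(cand) for p in passages), default=0.0)


def select_best(candidates: list[Candidate], passages: list[str], alpha: float = 0.5) -> Candidate:
    """Return the candidate with the highest weighted mix of fluency and support."""
    def combined(c: Candidate) -> float:
        # exp() maps a log-probability (<= 0) into (0, 1], the same range as support_score
        return alpha * math.exp(c.logprob) + (1 - alpha) * support_score(c.text, passages)
    return max(candidates, key=combined)


passages = ["The Transformer was introduced in the paper Attention Is All You Need."]
candidates = [
    Candidate("The Transformer was introduced in Attention Is All You Need.", logprob=-1.2),
    Candidate("The Transformer was introduced by Google in 2010.", logprob=-0.9),
]
best = select_best(candidates, passages)
print(best.text)  # The Transformer was introduced in Attention Is All You Need.
```

The second candidate is deliberately wrong. It scores higher on fluency but loses on support, so the weighted score picks the first. Raising `alpha` shifts the balance toward fluency.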

Implementation

Here is the Colab link

The implementation grades each retrieved document on how well it answers the question, then acts on that grade:

For Correct documents -

- If at least one document is relevant, it moves on to creating text

- Before generating text, it refines the retrieved knowledge

- This breaks each document down into “knowledge strips”

- It rates each strip and discards the irrelevant ones
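The refinement step can be sketched in plain Python under two assumptions. First, knowledge strips are taken to be sentences. Second, relevance is scored by keyword overlap with the question, standing in for a real relevance grader. `split_into_strips`, `strip_relevance`, `refine_knowledge`, and the `threshold` value are hypothetical names chosen for illustration.

```python
import re

STOPWORDS = {"the", "a", "an", "is", "are", "of", "in", "to", "and", "what", "how", "does", "do"}


def content_words(text: str) -> set[str]:
    """Lowercased alphanumeric words, minus a small stopword list."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def split_into_strips(document: str) -> list[str]:
    """Decompose a document into sentence-level knowledge strips."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]


def strip_relevance(question: str, strip: str) -> float:
    """Toy grader: share of the question's content words that appear in the strip."""
    q = content_words(question)
    return len(q & content_words(strip)) / len(q) if q else 0.0


def refine_knowledge(question: str, documents: list[str], threshold: float = 0.3) -> str:
    """Decompose each document, drop low-scoring strips, recompose the rest in order."""
    kept = [
        strip
        for doc in documents
        for strip in split_into_strips(doc)
        if strip_relevance(question, strip) >= threshold
    ]
    return " ".join(kept)


question = "How does self-attention use query and key vectors?"
docs = [
    "Self-attention computes a weighted sum of value vectors. "
    "Jay Alammar writes illustrated guides. "
    "The weights come from query-key dot products."
]
print(refine_knowledge(question, docs))
# Self-attention computes a weighted sum of value vectors. The weights come from query-key dot products.
```

Splitting at sentence boundaries keeps each strip self-contained. The granularity and grader used in the paper or the Colab may differ.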

For Ambiguous or Incorrect documents -

- If all documents are irrelevant, or the grader is unsure about them, the method looks for more information.

- It runs a web search to supplement what it retrieved

- The diagram in the paper also shows that the query may be rewritten to get better results.

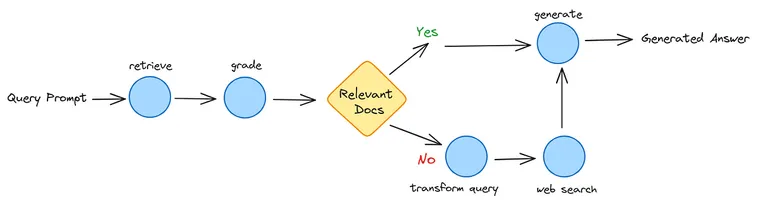
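The Correct / Ambiguous / Incorrect split can be sketched as a function of per-document relevance scores in [0, 1]. The rule is:

- Correct if any score clears an upper threshold.
- Incorrect if every score falls below a lower threshold.
- Ambiguous otherwise.

The threshold values here are assumptions chosen for illustration, and `decide_action`, `upper`, and `lower` are hypothetical names.

```python
from enum import Enum


class Action(Enum):
    CORRECT = "correct"      # refine the retrieved knowledge, then generate
    INCORRECT = "incorrect"  # discard retrieval, fall back to web search
    AMBIGUOUS = "ambiguous"  # supplement the retrieved knowledge with web search


def decide_action(scores: list[float], upper: float = 0.7, lower: float = 0.3) -> Action:
    """Map relevance scores of the retrieved documents to a CRAG action."""
    if not scores:
        return Action.INCORRECT  # nothing retrieved: treat as irrelevant
    if any(s >= upper for s in scores):
        return Action.CORRECT
    if all(s < lower for s in scores):
        return Action.INCORRECT
    return Action.AMBIGUOUS


print(decide_action([0.9, 0.2]))   # Action.CORRECT
print(decide_action([0.1, 0.25]))  # Action.INCORRECT
print(decide_action([0.5, 0.1]))   # Action.AMBIGUOUS
```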

We will implement CRAG using LangGraph and LanceDB, with LanceDB providing fast retrieval from the knowledge base. Here is how the flow will work.

The steps to implement this flow are:

- Retrieve relevant documents.

- If no relevant document is found, fall back to supplementary retrieval with web search (using the Tavily API).

- Rewrite the query to optimize it for web search.
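The fallback path can be sketched with the search backend injected as a plain function, so the control flow runs without network access. The article performs the search through the Tavily API. In this sketch, `rewrite_query` is a hypothetical keyword-filtering heuristic standing in for whatever rewriter the Colab uses. `fake_search` is an offline stub, not the Tavily client.

```python
import re
from typing import Callable, Optional

FILLER = {"what", "is", "the", "a", "an", "of", "please", "tell", "me", "about", "can", "you", "how", "does"}


def rewrite_query(question: str) -> str:
    """Hypothetical rewriter: drop filler words to form a terse web-search query."""
    words = re.findall(r"[A-Za-z0-9-]+", question)
    return " ".join(w for w in words if w.lower() not in FILLER)


def retrieve_with_fallback(
    question: str,
    relevant_docs: list[str],
    web_search: Callable[[str], list[str]],
) -> tuple[list[str], Optional[str]]:
    """Return (context, web_query); web_query is None when no web search was needed."""
    if relevant_docs:
        return relevant_docs, None
    query = rewrite_query(question)
    return web_search(query), query


def fake_search(query: str) -> list[str]:
    """Offline stub standing in for a real search API such as Tavily."""
    return [f"result for: {query}"]


context, used_query = retrieve_with_fallback(
    "Can you tell me about the positional encoding in Transformers?", [], fake_search
)
print(used_query)  # positional encoding in Transformers
```

Injecting `web_search` keeps the decision logic testable. A real search client can be passed in without changing `retrieve_with_fallback`.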

The sections below outline the main steps; for the full implementation, check the Colab.

Building the Retriever

We will use Jay Alammar’s articles on Transformers as the knowledge base.
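The article does not say how the articles are split before indexing. A common approach, sketched here as an assumption, is fixed-size character chunks with some overlap, so that text near a boundary appears in two neighbouring chunks. `chunk_text`, `chunk_size`, and `overlap` are hypothetical names.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks; consecutive chunks share `overlap` chars."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```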

Define the Knowledge Base Using LanceDB

Embedding Extraction

We will use the OpenAI embedding function to extract the document embeddings and insert them into the LanceDB knowledge base, from which context is fetched at query time.
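This is an in-memory stand-in that shows what the embed-store-search step does without an API key:

- A sparse word-count vector replaces the OpenAI embedding function.
- A list with brute-force cosine search replaces the LanceDB table.

Everything here (`embed`, `cosine`, `VectorStore`) is hypothetical and is not the LanceDB API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy sparse embedding: word counts (stand-in for the OpenAI embedding function)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """In-memory stand-in for a LanceDB table holding (text, vector) rows."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, Counter]] = []

    def add(self, texts: list[str]) -> None:
        # embed once at insert time, as a vector database would
        self.rows.extend((t, embed(t)) for t in texts)

    def search(self, query: str, k: int = 2) -> list[str]:
        """Brute-force nearest neighbours by cosine similarity."""
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = VectorStore()
store.add([
    "Self-attention lets each token attend to every other token.",
    "Positional encodings inject word order into the Transformer.",
    "Tokenizers split raw text into subword units.",
])
print(store.search("How does the Transformer know word order?", k=1))
# ['Positional encodings inject word order into the Transformer.']
```

Swapping in real embeddings changes only `embed`. The add/search shape stays the same, and that is the role LanceDB plays in the article, with proper indexing over dense vectors.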

Define the LangGraph Workflow

We will build the LangGraph graph by adding nodes and edges that follow the flow diagram above.

The graph includes five nodes (Document Retriever, Generator, Document Grader, Query Transformer, and Web Search) and one conditional edge, Decide to Generate.
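The wiring can be sketched without LangGraph as a small state machine. Each node is a function that takes and returns a state dict, and `decide_to_generate` plays the role of the conditional edge. In LangGraph the same structure would be declared with `StateGraph.add_node`, `add_edge`, and `add_conditional_edges`. Here every node body is a hypothetical stub (a fixed corpus, a keyword grader, a canned web result) so that the control flow runs offline.

```python
import re

State = dict  # keys used below: question, documents, web_needed, answer, trace

STOP = {"what", "is", "the", "a", "how", "does", "do"}
CORPUS = [  # hypothetical stand-in for the LanceDB knowledge base
    "Self-attention relates every token to every other token.",
    "Positional encodings add word order information.",
]


def words(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(state: State) -> State:
    # stub retriever: return the whole toy corpus
    return {**state, "documents": list(CORPUS)}


def grade_documents(state: State) -> State:
    # stub grader: keep documents sharing a non-stopword with the question
    q = set(words(state["question"])) - STOP
    kept = [d for d in state["documents"] if q & set(words(d))]
    return {**state, "documents": kept, "web_needed": not kept}


def transform_query(state: State) -> State:
    # stub rewriter: drop stopwords to get a terser search query
    return {**state, "question": " ".join(w for w in words(state["question"]) if w not in STOP)}


def web_search(state: State) -> State:
    # stub for a real web search call; appends one canned result
    return {**state, "documents": state["documents"] + [f"Web result about {state['question']}"]}


def generate(state: State) -> State:
    # stub generator: join the context into an answer
    return {**state, "answer": " ".join(state["documents"])}


def decide_to_generate(state: State) -> str:
    # conditional edge: generate directly only if some document survived grading
    return "transform_query" if state["web_needed"] else "generate"


NODES = {f.__name__: f for f in (retrieve, grade_documents, transform_query, web_search, generate)}
EDGES = {"retrieve": "grade_documents", "transform_query": "web_search",
         "web_search": "generate", "generate": None}  # None marks the end
CONDITIONAL_EDGES = {"grade_documents": decide_to_generate}


def run(question: str) -> State:
    state: State = {"question": question, "trace": []}
    node = "retrieve"  # entry point
    while node is not None:
        state = NODES[node](state)
        state["trace"].append(node)
        node = CONDITIONAL_EDGES[node](state) if node in CONDITIONAL_EDGES else EDGES[node]
    return state


print(run("What is self-attention?")["trace"])  # ['retrieve', 'grade_documents', 'generate']
print(run("What is beam search?")["answer"])    # Web result about beam search
```

The first question finds a relevant document and goes straight to generation. The second finds none, so it takes the rewrite and web-search branch before generating.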

The following graph visualizes the flow from the diagram.

Here is the generated output, showing what each node did and which decisions it made on the way to the final answer.

Check out the Colab for the full implementation.

Challenges and Future Work

CRAG helps generate more factually accurate output from the knowledge base, but some challenges remain for its widespread adoption:

- Retrieval quality depends on comprehensive knowledge coverage.

- Computation cost and latency increase compared to a basic RAG pipeline.

- The framework is sensitive to the retriever's limitations.

- Balancing fluency and factuality is challenging.